Labs Over Fabs: Support the Future, Not the Past

How the US Should Invest in the Future of Semiconductors

For the past six months, I’ve been working with Chris Miller (Tufts) and Danny Crichton (TechCrunch) on a Korea Foundation-funded exploration of issues facing the US semiconductor industry. For the next three days, I’ll be publishing selections of our report on the newsletter. Today’s section focuses on lessons learned from US chip policy in the 1980s that underscore how the US needs to invest in the technology of the future, as opposed to only supporting fab construction.

See here for the full report complete with footnotes and a fancy pdf layout if you’d prefer that format.

We’ll also be doing a Zoom event with my co-authors this Thursday at 9 AM EST. Feel free to register here.

Fears that the U.S. will lose its position in chip design—and the reality that it is no longer a leader in chip manufacturing—have triggered discussion among U.S. policymakers that the domestic semiconductor industry needs help—and fast. The past several years have seen a bevy of rushed proposals from policymakers, researchers, and the chip industry, ranging from tens of billions of dollars of new subsidies to further trade restrictions to new export controls and sanctions on Chinese competitors.

Our view is that many of these proposals define America’s problems in chips far too narrowly; they are focused on resuscitating the past glory of the industry rather than investing in areas where America can lead the future. Moreover, they are designed to close the industry off to competitors rather than advocating open competition—and ensuring America is the most competitive player.

First, much discussion about investing in chips has focused on ensuring U.S. dominance in designing “leading edge” chips (those made with the most advanced manufacturing process) and on resuscitating U.S. manufacturing of them. Yet there isn’t one leading edge, but rather many different edges. Consider the different types of chips. Some are digital chips that provide the computing power in servers and iPhones. Others are analog, such as those managing the power supply in devices. Chips are also designed around different instruction set architectures: PCs and most data centers run on the x86 architecture, while UK chip designer Arm’s architecture is mainly used in mobile devices.

Chips that power data centers can cost thousands of dollars, while the simplest microcontrollers can cost pennies. Some chips, including many types of memory chips, can be plugged into different devices. Others, like those designed by Tesla, only work in the company’s cars. Then there are burgeoning frontiers like quantum computing, which exploits quantum-mechanical properties to build an entirely new model of computation.

Rather than designing a policy for one “leading edge,” defined solely by the manufacturing of chips, policymakers need to see the semiconductor industry as a diverse collection of different technologies, each with key uses in the economy.

America’s strategy should be to support a deep and resilient chip ecosystem encompassing many different areas of the industry.

This ecosystem-based perspective is absent from most policy discussions around semiconductors, which focus primarily on market interventions like business incentives and trade restrictions. Yet, America’s leading position in semiconductors starts with its educational and research institutions and, by extension, the high skill of its workforce. A more strategic and comprehensive plan for semiconductors must look broadly at the industry as a whole and ensure that the diverse talent and research required for sustaining America’s competitive advantages are fully supported.

The semiconductor industry is going through a generational transition from closed-source technologies to open-source models, which will empower a more diverse, specialized, and competitive chip industry. U.S. policymakers have been divided over this transition, with widespread fear that open-source technology will surrender America’s competitive advantages. Open-source will force some chip firms to change their business models, but if approached strategically, it could also catalyze a new generation of semiconductor innovation. Rather than protecting incumbents who fear open-source technologies and want to defend their existing intellectual property, the U.S. should ask how it can encourage adoption of open-source chip architectures to drive down costs, increase security, and spur innovation.

America’s actions to support its semiconductor industry need to focus not on protecting the present-day leaders but on fostering a base of innovation that will springboard future leaders.

This report explores, in three sections, how the U.S. can design a more comprehensive policy for the semiconductor industry by:

Supporting next-generation research into semiconductor technology

Improving the pipeline of engineers and talent into the semiconductor industry at all phases

Engaging with open-source technologies to make America’s industry the most globally competitive

Focus on Next Generation Technologies

How can the U.S. government support next-generation semiconductor technologies? Competitors from South Korea, Taiwan, and, increasingly, China have taken market share in the manufacturing of chips. Some analysts have suggested that the U.S. market position is under threat. The U.S. government is now mobilizing to support the microelectronics industry. China’s self-sufficiency drive is something new, and worrisome, for the chip industry, but this isn’t the first time that the United States has faced a challenge to its dominant position in the global chip industry. This section will explore how the U.S. semiconductor sector faced international competition in the past, notably from Japanese rivals in the 1980s. The strategies that were successful then in retaining industry leadership hold lessons for today. Now that the U.S. government again considers semiconductors a strategic technology, the industry’s history provides useful templates for assessing efforts to support chip technology.

From Japanese Competition in the 1980s to Chinese Competition in the 2020s

In recent years, China’s government has launched multiple initiatives to catch up in semiconductor technology. It is pouring billions of dollars annually into its chip firms. Programs like Made in China 2025 identified microelectronics as a priority industry for reducing China’s foreign reliance. According to Organisation for Economic Co-operation and Development (OECD) studies, China provides substantially higher subsidies than any other country. The Trump Administration struck back against China’s chip efforts by imposing export controls that prevent Huawei and its HiSilicon chip design unit from contracting with almost any chip manufacturer to produce its chips. In addition, the U.S. has restricted SMIC, China’s biggest chip manufacturer, from buying advanced equipment from abroad. In response, Beijing has redoubled efforts to subsidize domestic chip production and design. Most studies expect China’s share of the semiconductor manufacturing sector to increase over the coming decade, though China currently can’t produce the most advanced chips with the smallest feature sizes and is unlikely to do so in coming years.

Yet today’s competition with China isn’t the first time that the United States has faced major lower-cost competitors. In the 1980s, Japanese companies learned to produce DRAM memory chips, which at the time were the mainstay of the semiconductor market, at higher quality and lower price than American companies. Japanese firms first drew on practices pioneered by U.S. chip producers, but improved them substantially, driving down the rate of manufacturing defects. U.S. chip companies cried foul, accusing Japan’s government of subsidizing semiconductor production and stealing U.S. chip secrets. There were credible instances of Japan doing both, but it was undeniable that U.S. firms lagged Japan in quality, as American customers made clear by choosing Japanese products.

U.S. chip firms in the 1980s demanded government subsidies and the imposition of restrictions on Japanese imports. After intense lobbying by semiconductor executives, they got both, convincing even the generally free-market Reagan administration to support protectionist measures to help the industry. Armed with the threat of tariffs, the Reagan administration forced Japan to adopt quotas and price floors for DRAMs and EPROMs, two types of memory chips.

But in hindsight, it isn’t obvious that those actions made much difference. All but one American DRAM producer either refocused on other markets or went bankrupt. Nor did Japan’s lead last: cutthroat competition in the DRAM market eventually drove all the Japanese firms out of the business, as they were supplanted by even lower-cost rivals from South Korea.

These protectionist policies were designed to support American manufacturing of products that already existed, but those products were quickly superseded because of the constant, rapid improvement in computing power described by Moore’s Law. Coined by Intel co-founder Gordon Moore, this prediction roughly states that the number of transistors on a chip, and with it the chip’s computing power, will double every two years. Because of this rapid rate of technological change, the most advanced semiconductors are often out of date in just a couple of years. Japanese firms, which had a lock on the DRAM market in the 1980s, found themselves facing huge problems by the early 1990s. They were out-innovated by American rivals like Intel.
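
To make that pace concrete, here is a back-of-the-envelope sketch that assumes Moore’s Law exactly as stated above: one doubling of transistor count every two years. That rule is a simplification, not a physical law, and the function names are our own illustrative choices rather than anything from the report.

```python
import math


def moores_law_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth in transistor count after `years`, assuming one doubling every `doubling_period` years."""
    return 2 ** (years / doubling_period)


def years_to_reach(ratio: float, doubling_period: float = 2.0) -> float:
    """Years needed to multiply transistor count by `ratio` at the same doubling rate."""
    return doubling_period * math.log2(ratio)


if __name__ == "__main__":
    # How far today's state-of-the-art chip falls behind under the assumed rule
    for years in (2, 4, 10):
        print(f"after {years} years: {moores_law_factor(years):.0f}x the transistors")
    # How long the rule takes to deliver a thousandfold (2**10) improvement
    print(f"1024x takes {years_to_reach(1024):.0f} years")
```

Under this assumption, a decade of progress multiplies transistor counts roughly 32-fold, so a policy built around protecting one product generation is quickly overtaken.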

Japan’s strategy of borrowing huge sums to build fabs—reminiscent of China’s state support today—caused massive overexpansion that dragged down Japanese firms in the 1990s when loans came due.

And the focus on existing technologies left Japan vulnerable to new competitors from South Korea, which soon learned how to produce DRAM as efficiently and at even lower cost. Today, Japan remains a major player in certain parts of the semiconductor supply chain, but it is far from the seemingly dominant role of the late 1980s.

The U.S. chip industry fended off the Japanese challenge not through protectionism but through innovation. Two examples demonstrate this: Intel’s pivot away from DRAM and the origins of electronic design automation (EDA) tools. Start with Intel, which was one of America’s leading DRAM producers in the early 1980s. It suffered immensely from low-cost, high-quality Japanese competitors. Rather than trying to compete head-on, Intel left the memory market and reoriented its business model toward high-value microprocessors, building on its earlier win as the processor supplier for the IBM personal computer. Instead of relying on government subsidies or protectionist policies, Intel drew on America’s existing pool of chip experts, deep capital markets, and a business culture that prioritized innovation over defending incumbent producers.

This pivot made Intel the world’s leading provider of PC chips, earning it rich profits as PCs became mainstream in the 1990s and 2000s. It was the world’s largest chip firm for much of the period. By contrast, U.S. firms that stayed in the DRAM business and prayed that protectionism would save them mostly went bankrupt. Those that invented new products and new business models did better. DRAMs were the most common type of chip, but they were far from the most profitable. What made Intel so successful was that it identified a high-margin part of the market and, in partnership with Microsoft, which wrote the software on which PCs run, built an ecosystem around it. The innovation in Intel’s business model was as important as the innovation in its research labs.

A shining example of American success in innovation comes from the EDA tools market.

Today’s chips have many millions or billions of microscopic transistors, each of which switches electric current on and off to produce the 1s and 0s that make computing possible. Chips with so many tiny components can’t be designed by hand, nor can each transistor be tested one by one. Instead, EDA software tools model how chips will function and automate the layout of transistors and other components of a chip’s design. Three U.S.-based companies dominate the EDA market: Cadence, Synopsys, and Mentor, the last of which is owned by Germany’s Siemens. This market share gives the United States immense power. Washington’s restrictions on Huawei have focused in part on cutting off its chip design arm from EDA tools, without which it is all but impossible to design advanced chips. No other country has capabilities comparable to America’s in chip design software. This provides a competitive business advantage and also a useful geopolitical tool.

How did the U.S. develop this dominant position in chip design software? In 1982, under the auspices of the Semiconductor Research Corporation (SRC), a research consortium backed by government and industry, centers of excellence in computer-aided design were established at Carnegie Mellon University and University of California, Berkeley. SRC poured money into these two universities, spending $34 million at Carnegie Mellon and $54 million at Berkeley over the subsequent years. The result was a flurry of startups producing design software—something that no other country had. Over two decades, most of these startups consolidated into the three firms that dominate the industry today. If it weren’t for the funding of these programs at America’s research universities, the United States might not have been able to impose effective export controls on Huawei today.

Support the Future, Not the Past

As the U.S. government considers how to support semiconductor firms facing competition from China, it should keep these two historical examples in mind. There’s little point in focusing on today’s chips, which will be obsolete in the time it takes Congress to pass legislation affecting them. To succeed, companies need not only advanced technologies but also effective business models, something the government is unlikely to help them with. Given China’s willingness to hand out huge sums to its corporations, the United States is unlikely to win a subsidy arms race. Nor should it try. As the response to Japanese competition shows, the U.S. semiconductor strategies that have worked in the past have supported research into the technologies of the future, then left it to companies to devise profitable business models.

The first step in such a strategy is to consider how the industry looks today, and where the United States faces potential economic and strategic vulnerabilities. The semiconductor industry is commonly broken down into five parts, each of which is needed to produce a chip:

Chip Design

Electronic Design Automation Software

Semiconductor Manufacturing Equipment

Manufacturing (aka Fabrication)

Testing and Packaging

Of these, the United States is the clear leader in chip design, driven by companies like Qualcomm and AMD in addition to big tech firms like Apple and Google, which in recent years have invested heavily in chip design. EDA software, as mentioned above, is dominated by three U.S.-based firms. Semiconductor manufacturing equipment is produced by a small number of firms, the biggest of which are located in three countries: the United States, Netherlands, and Japan. New U.S. controls on using U.S. technologies to produce certain Chinese chips may benefit competitors in third countries, like Japan, by making it less attractive to purchase U.S. manufacturing equipment. For now, though, it is extraordinarily difficult to create an advanced chip without using American manufacturing equipment. When it comes to chip design, design software, and manufacturing equipment, most experts interviewed for this report expect that the United States will retain its market position in the coming years.

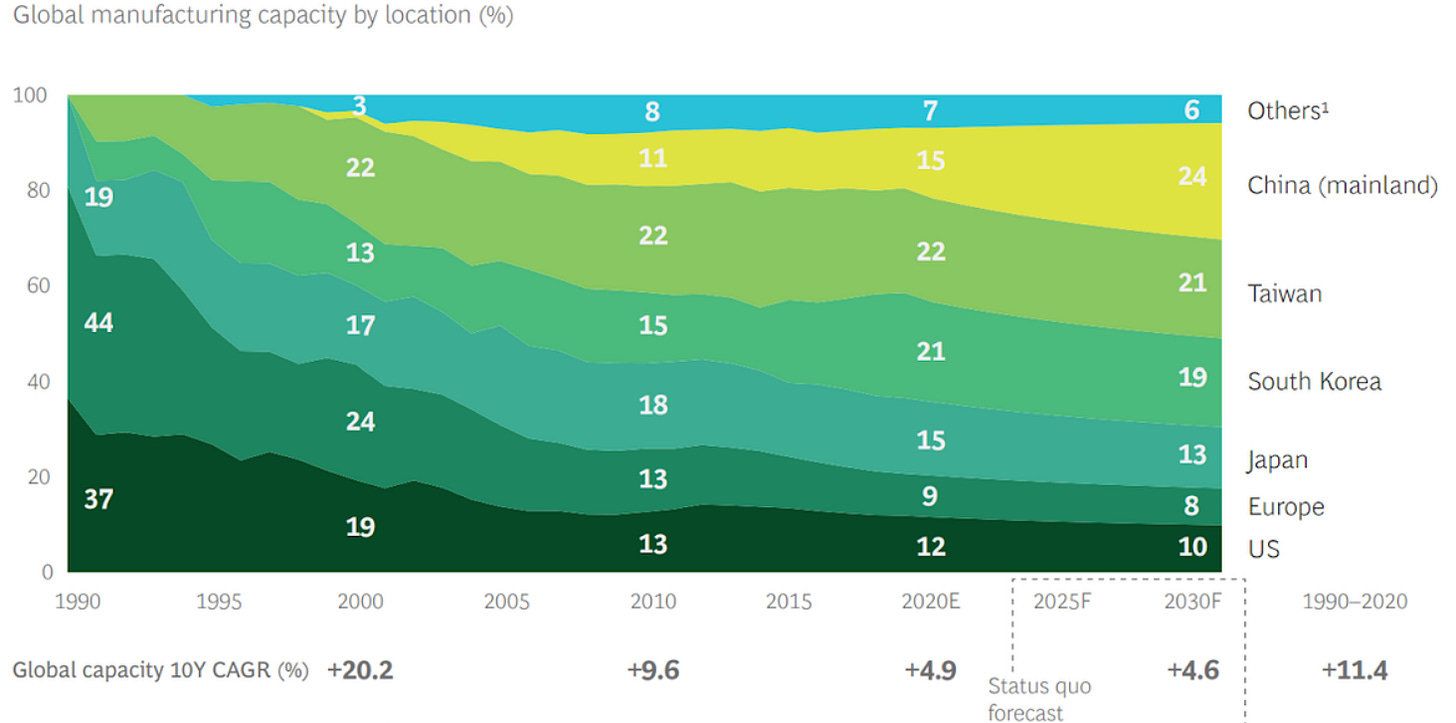

In contrast, America’s market share has waned in the final two segments of the industry: fabrication, and testing and packaging. Testing and packaging has been outsourced to low-cost countries for decades, as it was long seen as low value, though this perspective is beginning to change. More important has been the offshoring of fabrication. As recently as 1990, over a third of semiconductors were manufactured in the United States. Today, only 12% are. The key reason, as the figures below suggest, is cost. A study by the Semiconductor Industry Association and the Boston Consulting Group found that, depending on the type of fab, new facilities are around 20% cheaper in South Korea and Taiwan and 30% cheaper in China than in the United States.

Changes to the Chip Manufacturing Landscape. Source: Semiconductor Industry Association

Source: Antonio Varas, Raj Varadarajan, Jimmy Goodrich, Falan Yinug, “Government Incentives and US Competitiveness in Semiconductor Manufacturing,” Semiconductor Industry Association & Boston Consulting Group, September 2020.

Is the offshoring of manufacturing a problem for the United States?

Some analysts argue that it is not, because foreign governments’ subsidies for semiconductor manufacturing have lowered production costs for, and thus increased the profits of, U.S.-based design firms. They also note that as much money is made in chip design as in manufacturing.

However, the offshoring of manufacturing could have three negative consequences for the United States. First, and perhaps most worrisome, is the risk to electronics supply chains in the event of a massive earthquake or a war in Asia. The most advanced manufacturing today takes place at TSMC’s facilities in Taiwan, which mostly produce chips for smartphones and computers, but which also manufacture electronics for America’s F-35 fighter. Amid tension with China, it is increasingly plausible to imagine scenarios in which American access to Taiwanese manufacturing is severed, imperiling the entire electronics industry. If TSMC’s facilities in Taiwan were knocked offline, it could cause years of delays to computer, data center, and smartphone production.

Second, America’s ability to control China’s access to technology, a tool that Washington has used repeatedly against Chinese tech firms like Huawei in recent years, will erode as more manufacturing happens offshore. American export controls are still effective today, given that even foreign manufacturing facilities need U.S. equipment to function and are therefore subject to U.S. Commerce Department rules. As still more manufacturing moves offshore, however, companies may try to replace U.S. technology in their supply chains to avoid U.S. restrictions.

Finally, the shift to offshore production can create a self-reinforcing negative spiral. Offshoring erodes the U.S. workforce that has the skills needed to invent new production techniques. Offshoring matters for manufacturing quality, too. In producing semiconductors, quality is measured by “yield,” the share of chips on each silicon wafer that are functional. Many industry experts believe there is an inescapable relationship between volume and yield, because high production volume provides opportunities to learn, eliminate mistakes, and thus improve yield. Some observers argue that this is why Intel, which produces its own chips and which used to have the most advanced manufacturing processes, has fallen behind TSMC, which produces for many companies and thus has far higher production volumes than Intel.

The Challenge of Accurately Targeting Subsidies

These dynamics have led some analysts and officials to call for government support for semiconductor manufacturing. The semiconductor industry is, not surprisingly, in favor. But supporting the chip industry only makes sense if government help has benefits beyond those that accrue to the companies receiving aid. Some legislation in Congress, however, has proposed broad-based financial support for the construction of fabrication facilities. This is not a recipe for spending money wisely. According to the SIA/BCG study, a new facility for producing logic chips can easily cost $20 billion. A new fab in China, the same study found, is around 30% cheaper. Making up the cost differential completely would require $6 billion in subsidies per fab. Compare that prodigious sum to the tens of millions of dollars of well-placed research grants that seeded the entire EDA industry.
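
The subsidy arithmetic can be sketched using only figures quoted in this report: a $20 billion logic fab, new facilities roughly 20% cheaper in South Korea and Taiwan and 30% cheaper in China, and SRC’s $34 million at Carnegie Mellon and $54 million at Berkeley. Reading “X% cheaper” as a flat discount on the U.S. cost is our simplifying assumption; real cost comparisons vary by fab type, and the names below are illustrative.

```python
# Figures quoted in the report; reading "X% cheaper" as a flat discount
# on the U.S. cost is a simplifying assumption, not the study's method.
US_LOGIC_FAB_COST = 20e9  # dollars, SIA/BCG estimate for a new logic fab

COST_DISCOUNT = {  # how much cheaper a comparable fab is abroad
    "South Korea": 0.20,
    "Taiwan": 0.20,
    "China": 0.30,
}

# SRC spending on the Carnegie Mellon and Berkeley CAD centers (nominal dollars)
SRC_EDA_GRANTS = 34e6 + 54e6


def subsidy_gap(us_cost: float, discount: float) -> float:
    """Subsidy per fab needed to match a foreign fab that is `discount` cheaper.

    us_cost - us_cost * (1 - discount) simplifies to us_cost * discount.
    """
    return us_cost * discount


if __name__ == "__main__":
    for country, discount in COST_DISCOUNT.items():
        gap = subsidy_gap(US_LOGIC_FAB_COST, discount)
        multiple = gap / SRC_EDA_GRANTS
        print(f"{country}: ${gap / 1e9:.1f}B per fab, ~{multiple:.0f}x the SRC EDA grants")
```

For China, the gap works out to $6 billion per fab, roughly 68 times the $88 million SRC spent at the two universities. The comparison uses nominal dollars and ignores inflation since the 1980s, which would narrow the multiple somewhat.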

Given that U.S. semiconductor export controls are effective because of America’s near-monopoly position in the EDA software used to design chips and in certain types of equipment used to manufacture them, it would be more useful for America’s foreign policy purposes to support these subsectors than to subsidize the construction of new fabs. As for the risk that an earthquake or war in Asia disrupts Taiwanese chip production, it would be smarter to mitigate those risks directly, for example by better defending Taiwan, rather than trying to duplicate TSMC’s production facilities.

The bigger problem of subsidizing today’s technology is that it will soon be outclassed by something new.

Though many chips are still produced using older processes, the largest share of revenue goes to the newest processes. Historically, a new manufacturing process has arrived every two or three years. The cutting edge is always moving forward. This makes government efforts to boost semiconductor manufacturing challenging. Arizona, for example, used a variety of financial incentives to convince TSMC to build a small new local facility. The fab will produce chips at the 5-nanometer node, which is TSMC’s most advanced today. But by the time the facility is in operation, TSMC plans to be producing more advanced chips in Taiwan on its 3-nanometer node. So Arizona will be getting second-best technology.

Focus on Tomorrow’s Technologies

Rather than targeting today’s cutting edge and being left behind by technological progress, the U.S. government should support the development of processes and materials that are not currently in production and that may be too risky for companies to invest in. This is a sphere where the U.S. government has historically played an important role. The Defense Advanced Research Projects Agency (DARPA) has played a major role in funding microelectronics since the industry’s earliest days. Some of the first major orders for semiconductors came from the Minuteman II ICBM program and the Apollo spacecraft’s guidance computer. The U.S. government has a strong track record in funding next-generation technologies that companies later commercialize.

Focusing on next-generation technologies rather than today’s leading edge is particularly important because it avoids getting trapped by today’s technology paradigms. The example of Intel in the 1980s is instructive: it abandoned the cheap DRAM market amid Japanese competition and pioneered chips for PCs, a move that made it more valuable than all Japanese chip producers combined. Entrepreneurs are always on the lookout for disruptive technologies. Rather than funding incumbents, which might be on the verge of being disrupted themselves, the U.S. government should push the cutting edge forward by funding basic research and early-stage technologies.

Given today’s market structure, it would be very expensive for the United States to move from a 12% to 20% market share in semiconductor fabrication using existing technologies. It would be more strategic to bet on disruptive technologies—exactly the type of technologies that America’s research universities already produce, and which U.S. firms have a track record of commercializing.

Recent decades have seen plenty of disruption in chip design. Existing U.S. government programs are supporting research into advanced packaging technologies, for example. Given America’s reliance on foreign fabs, we should also be funding research into next-generation materials and manufacturing processes that have the potential to disrupt the existing manufacturing paradigm.

Moreover, the process of semiconductor fabrication needs major disruption if it is to continue delivering enhancements in computing power. The International Roadmap for Devices and Systems, the joint industry and academic body that agrees on an annual “roadmap” for the future direction of semiconductor technology, has noted that the process of shrinking transistors is facing new challenges. Very roughly speaking, the number of transistors on a chip is correlated with its computing power. The earliest chips in the 1960s had just a handful of transistors; today, Apple’s new M1 chip has 16 billion. Fabrication technologies, which made it possible to shrink transistors, therefore were crucial to technological advance.

Now, however, the process of shrinking transistors “will reach fundamental limits . . . at the end of this decade,” the IRDS roadmap predicted in 2020. It is implausible that technological progress will simply stop then. The IRDS argues that quantum technologies, which offer the prospect of computers that solve certain problems orders of magnitude more rapidly than today’s computers, may at that point begin to be broadly applied, though other experts are skeptical that quantum computing will find commercial application in such a short time horizon. What is clear is that the current model of semiconductor manufacturing faces potential disruption. Rather than spending money trying to replicate existing fabs in South Korea and Taiwan, we should be exploring next-generation technologies that would leapfrog them. This isn’t just smart technology policy. It’s also crucial for computing technology to continue progressing.

Some experts expect design, a field where the United States leads, to play a progressively larger role in driving performance improvements as transistor shrinkage slows. The more that design, rather than manufacturing, drives performance, the less strategic manufacturing processes become.

Similarly, the techniques needed to improve manufacturing processes may not necessarily come from the companies that manufacture chips. As previously discussed, fabs in China, Taiwan, and South Korea all heavily rely on equipment produced in the Netherlands, Japan, and the United States. Building on existing U.S. strengths in manufacturing equipment is more likely to yield economic and strategic benefits than trying to subsidize our way to higher market share in fabrication, especially as fabrication itself faces looming technological challenges. Finally, although experts are divided on whether quantum computing techniques will gain practical applicability in the coming decade, if quantum computing materializes, it would transform the industry. Given existing U.S. strengths in quantum computing research in academia and at corporations like IBM, Microsoft, Intel, and Google, this is another area where additional U.S. government investments could have a meaningful impact.